Guard Your Data, but Don’t Imprison It: The Benefits of Data Openness for Utilities

The most valuable utility of tomorrow will be the one that knows how to guard its data while simultaneously pursuing the benefits of data openness.

After decades of only having to think in terms of physical assets, utilities have largely come around to the idea that data can be as valuable as any of the transmission and distribution assets on their balance sheets.

And because utilities provide critical infrastructure, it stands to reason that they must hold data close, tightly guarded from outside eyes and interference.

There’s truth to that, but also significant risk. Although data security is essential to a modern utility, locking data down altogether threatens to cut utilities off from substantial benefits.

The most valuable utility of tomorrow will not be the one that pours its capital purely into its own data collection and analysis. It will be the one that keeps an eye open for new, alternative sources of data and analysis that can improve its operations, and that knows when to selectively allow data to leave its own four walls and flow to value-adding partners.

Wide data for lean operations

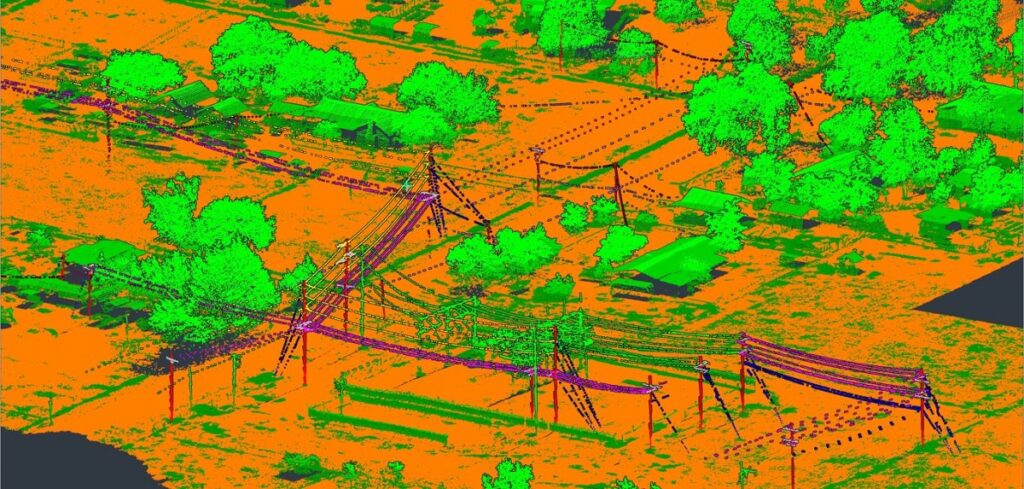

An easy mistake is to focus too narrowly on the data that has proven valuable in the past. At Sharper Shape, for example, we specialize in collecting aerial powerline inspection data by drone and helicopter. We work with our utility customers to create a digital twin of the powerline, building a detailed picture of its condition from sources such as LiDAR, hyperspectral imagery and HD RGB imagery. Combined, these sources create an exceptionally data-rich model of the asset, allowing for deep analysis and for planning of inspection and maintenance activities.

However, it takes time and money to fly these data collection missions (at least until automatic drone data collection becomes more widespread). And while it’s tempting to want the very best data possible every time, it’s not always necessary to conduct full aerial inspections at close intervals.

Instead, once the initial full inspection has been conducted, a utility can cost-effectively access satellite data for an up-to-date status check on its powerlines. Satellite data will not provide the same viewing angles or granularity as drone-collected data, but it is more than sufficient to alert the utility to major changes between full inspections, such as vegetation encroachment or other significant changes in the corridor environment. In this scenario, being open to third-party data sources can augment current capabilities and enable a leaner operation.
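To make the "alert between full inspections" idea concrete, here is a minimal sketch that compares a satellite-derived metric per span against the baseline set by the last full aerial inspection and flags large changes. The metric (share of the corridor covered by vegetation), the span IDs and the 0.15 threshold are all hypothetical; a real workflow would depend on the satellite product and the utility's own clearance rules.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SpanObservation:
    span_id: str
    vegetation_cover: float  # hypothetical metric: share (0-1) of the corridor buffer covered by vegetation


def flag_changed_spans(
    baseline: List[SpanObservation],
    latest: List[SpanObservation],
    threshold: float = 0.15,  # assumed alert threshold, to be tuned per utility
) -> List[str]:
    """Return IDs of spans whose vegetation cover grew by more than `threshold`
    since the baseline set by the last full aerial inspection."""
    baseline_cover = {obs.span_id: obs.vegetation_cover for obs in baseline}
    flagged = []
    for obs in latest:
        prior = baseline_cover.get(obs.span_id)
        if prior is None:
            continue  # no baseline for this span yet, so nothing to compare against
        if obs.vegetation_cover - prior > threshold:
            flagged.append(obs.span_id)
    return flagged


# Illustrative data only
baseline = [SpanObservation("S-001", 0.10), SpanObservation("S-002", 0.20), SpanObservation("S-003", 0.05)]
latest = [SpanObservation("S-001", 0.12), SpanObservation("S-002", 0.45), SpanObservation("S-003", 0.30)]
print(flag_changed_spans(baseline, latest))  # ['S-002', 'S-003']
```

The flagged spans are candidates for a targeted drone or helicopter revisit, rather than a trigger for a full re-inspection of the whole line.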

New data brings new correlations

Particularly innovative and integrated transmission utilities can go a step further and think more creatively about incorporating different data sources.

For example, while satellite data is a close cousin of aerial inspection data, more distant relatives can also be brought in, such as fault or power quality event history, or weather data.

Consider a scenario where vegetation management data is combined with third-party weather data to predict risk from upcoming storm activity. The utility may then be able to prioritize emergency inspections more intelligently ahead of the weather event, minimizing risks to people and property and restoring power as quickly as possible. Likewise, if an area of the network has a troubling recent history of power faults, engineers from different departments of the utility may be able to quickly sense-check aerial inspection data to rule out obvious physical causes before dispatching a team to investigate.
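As a rough illustration of combining the two sources, the sketch below ranks spans for pre-storm inspection by multiplying a vegetation encroachment score by a wind exposure factor derived from a forecast gust. The scoring formula, the 40 km/h and 100 km/h thresholds and all field names are assumptions made for illustration, not a validated risk model.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SpanRisk:
    span_id: str
    encroachment: float       # hypothetical 0-1 score from vegetation management data
    forecast_gust_kmh: float  # hypothetical peak gust from a third-party weather forecast


def wind_factor(gust_kmh: float, calm: float = 40.0, severe: float = 100.0) -> float:
    """Scale the forecast gust linearly to 0-1 between assumed calm and severe thresholds."""
    return min(1.0, max(0.0, (gust_kmh - calm) / (severe - calm)))


def risk_score(span: SpanRisk) -> float:
    # Simple multiplicative combination: high risk needs both encroachment and strong wind
    return span.encroachment * wind_factor(span.forecast_gust_kmh)


def prioritize(spans: List[SpanRisk], top_n: int = 3) -> List[str]:
    """Return up to `top_n` span IDs, highest combined risk first, skipping zero-risk spans."""
    scored = [(risk_score(s), s.span_id) for s in spans]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [span_id for score, span_id in ranked[:top_n] if score > 0]


# Illustrative data only
spans = [
    SpanRisk("S-101", 0.9, 50),  # heavy encroachment, light wind
    SpanRisk("S-102", 0.4, 95),  # moderate encroachment, severe wind
    SpanRisk("S-103", 0.8, 30),  # heavy encroachment, calm
    SpanRisk("S-104", 0.6, 85),  # notable encroachment, strong wind
]
print(prioritize(spans))  # ['S-104', 'S-102', 'S-101']
```

A multiplicative score is used here so that a span only ranks highly when both factors are present; a utility could just as well weight or add them, depending on how its engineers judge the risk.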

Bilateral data flows

The smartest approach to data won’t be purely inward-looking. Tomorrow’s leading transmission utilities will intelligently share their own data with third parties to drive value.

Of course, security is paramount. Transmission utilities are critical infrastructure operators, and talk of fully open, public data would be inappropriate. However, the world is moving beyond the point where all data can be kept locked away in silos.

To maintain security, data must be shared only with thoroughly vetted and trusted third parties; but once that due diligence is done, sharing can be invaluable. For example, sharing data can enable more informed tendering processes for third-party service providers, allowing them to base their bids on a real-world understanding of the utility’s challenges. Particularly pertinent applications might include arborists bidding for vegetation management contracts, or companies that manage the aftermath of a public safety power shutoff (PSPS), giving them advance insight into the state and requirements of the grid.

Revisiting data and repeat analysis

Trusted third parties can do more than help analyze and act on data in real time. They can also revisit older data for new insight and value.

By storing data in the cloud behind modern application programming interfaces (APIs), utilities can allow new inspection and analysis algorithms to be plugged in as they are developed. A key strength of artificial intelligence (AI) is that models improve as they are retrained on more data; new patterns and issues may therefore be identified in older datasets, even if those datasets were robustly analyzed at the time.

Keeping time-series data accessible to a growing library of AI algorithms enables change detection and prediction over time, since the same data can be analyzed many times with iteratively improved algorithms. This would not be practical if all data were locked away in-house.
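The "plug in new algorithms, re-run on old data" pattern can be sketched with a simple registry. In this sketch, two rule-based clearance checks stand in for trained AI models, and the archive format, algorithm names and thresholds are hypothetical. The point it illustrates is that re-running an improved algorithm over archived snapshots can surface an issue the original analysis missed, while the same time series also supports basic change detection.

```python
from typing import Callable, Dict, List

# Hypothetical archive: inspection date -> {span_id: measured clearance in metres}
Archive = Dict[str, Dict[str, float]]
Analyzer = Callable[[Dict[str, float]], List[str]]

ANALYZERS: Dict[str, Analyzer] = {}


def register(name: str) -> Callable[[Analyzer], Analyzer]:
    """Decorator that plugs a new analysis algorithm into the registry under `name`."""
    def wrap(fn: Analyzer) -> Analyzer:
        ANALYZERS[name] = fn
        return fn
    return wrap


@register("clearance_v1")
def clearance_v1(snapshot: Dict[str, float]) -> List[str]:
    # Original rule (assumed): flag spans with clearance below 3.0 m
    return sorted(span for span, clearance in snapshot.items() if clearance < 3.0)


@register("clearance_v2")
def clearance_v2(snapshot: Dict[str, float]) -> List[str]:
    # "Improved" rule (hypothetical): a stricter 4.0 m threshold
    return sorted(span for span, clearance in snapshot.items() if clearance < 4.0)


def reanalyze(archive: Archive, name: str) -> Dict[str, List[str]]:
    """Re-run one registered algorithm over every archived snapshot, oldest first."""
    analyzer = ANALYZERS[name]
    return {date: analyzer(archive[date]) for date in sorted(archive)}


def clearance_trend(archive: Archive, span_id: str) -> float:
    """Change in clearance for one span between the oldest and newest snapshots."""
    dates = sorted(archive)
    return archive[dates[-1]][span_id] - archive[dates[0]][span_id]


# Illustrative data only
archive = {
    "2021-06-01": {"S-1": 5.0, "S-2": 3.5},
    "2023-06-01": {"S-1": 4.2, "S-2": 2.8},
}
print(reanalyze(archive, "clearance_v1"))  # {'2021-06-01': [], '2023-06-01': ['S-2']}
print(reanalyze(archive, "clearance_v2"))  # {'2021-06-01': ['S-2'], '2023-06-01': ['S-2']}
print(round(clearance_trend(archive, "S-1"), 2))  # -0.8
```

Here the newer algorithm flags span S-2 in the 2021 data, two years before the original rule caught it. In a cloud-hosted platform the registry would typically sit behind an API rather than in a single Python module, but the idea is the same.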

Enabling data infrastructure

Safe, effective and appropriate data openness is easier said than done, however. What type of data infrastructure and IT architecture enables this? To start with, it must have a few key characteristics:

- Cloud-based: With many of the security concerns of early cloud infrastructure now addressed, utilities are beginning to take tentative steps toward cloud-hosted data platforms. This is critical for extracting maximum value from owned and third-party data, as it enables outward sharing of data for public tendering and smooth import of external sources.

- Data agnostic: By now it’s an old adage: garbage in, garbage out. In other words, there are no good outputs without good data inputs. However, while utilities should be choosy about data quality, the best system will accept and collate good data of any type and from any trusted source.

- Trusted and secure: Security is a moving target; yesterday’s best practice may be compromised tomorrow. A simple fact of doing business today is that utilities must keep pace with the latest cybersecurity tools and best practices, especially when data is being shared externally.
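The "data agnostic" and "trusted and secure" characteristics can work together at the point of ingestion. The sketch below accepts records of any data type, but only from an allowlist of vetted sources and only if they pass a minimal completeness check, then collates everything known about a span. The source names, data kinds and quality rule are hypothetical; real platforms would add authentication, schema validation and audit logging on top of this.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Set


@dataclass
class Record:
    source: str   # who supplied the data (hypothetical names, e.g. "own-drone")
    kind: str     # any data type: "lidar", "ndvi", "weather", "fault_log", ...
    span_id: str
    payload: Dict[str, Any] = field(default_factory=dict)


@dataclass
class Ingestor:
    trusted_sources: Set[str]
    accepted: List[Record] = field(default_factory=list)
    rejected: List[str] = field(default_factory=list)

    def ingest(self, record: Record) -> bool:
        # Security gate: only vetted sources are accepted
        if record.source not in self.trusted_sources:
            self.rejected.append(f"untrusted source: {record.source}")
            return False
        # Quality gate (garbage in, garbage out): minimal completeness check
        if not record.span_id or not record.payload:
            self.rejected.append(f"incomplete record from {record.source}")
            return False
        # Data agnostic: any `kind` is accepted once both gates pass
        self.accepted.append(record)
        return True

    def by_span(self, span_id: str) -> Dict[str, List[Dict[str, Any]]]:
        """Collate accepted records for one span, grouped by data type."""
        grouped: Dict[str, List[Dict[str, Any]]] = {}
        for record in self.accepted:
            if record.span_id == span_id:
                grouped.setdefault(record.kind, []).append(record.payload)
        return grouped


# Illustrative data only
ing = Ingestor(trusted_sources={"own-drone", "satellite-vendor"})
ing.ingest(Record("own-drone", "lidar", "S-1", {"min_clearance_m": 4.1}))  # accepted
ing.ingest(Record("satellite-vendor", "ndvi", "S-1", {"mean": 0.62}))      # accepted
ing.ingest(Record("unknown-api", "weather", "S-1", {"gust_kmh": 80}))      # rejected: not vetted
ing.ingest(Record("satellite-vendor", "ndvi", "S-2", {}))                  # rejected: empty payload
print(ing.by_span("S-1"))  # {'lidar': [{'min_clearance_m': 4.1}], 'ndvi': [{'mean': 0.62}]}
```

Keeping the trust decision separate from the data type means a new, vetted partner can be onboarded by updating the allowlist, without changing how its data is stored or collated.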

However, one characteristic that is not essential for a safe, value-adding approach to data is for the core data platform to be held in-house. First instincts may suggest that keeping the platform as close as possible is best for security and for deriving value, but that is not necessarily the case.

In fact, specialist third parties may have the expertise and experience to deliver the most effective data platform. This is the philosophy behind our Sharper CORE platform. Demand for top-tier tech talent outstrips supply, and it’s doubtful there is enough AI, machine learning and cybersecurity talent in the energy industry for every utility to build its own platform effectively (and securely).

Once again, it comes back to trust. The most crucial element in any approach to third-party data is finding a partner with the track record, integrity and aligned incentives to deliver a secure platform and maximum value creation.

Petri Rauhakallio is the Vice President of Customer Operations at Sharper Shape.